
OpenClaw stores conversation history in workspace memory files, but finding the right piece of context at the right time is hard. QMD fixes that by combining keyword matching with semantic vector search — so your assistant recalls what matters, not just what matches a string.

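QMD (Query-Memory-Document) is a hybrid retrieval backend for OpenClaw. Instead of relying on a single search strategy, it runs two in parallel:

- Keyword search (BM25), which matches the exact terms in your query.
- Semantic vector search, which compares embeddings to find passages with similar meaning even when the wording differs.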

Results from both channels are merged and re-ranked, giving you the precision of keyword search and the recall of embeddings in a single lookup.
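Here is a minimal Python sketch of what "merged and re-ranked" can look like. It uses Reciprocal Rank Fusion (RRF), a common way to combine two ranked lists. This is an assumption for illustration: the source does not say which fusion or re-ranking method QMD actually uses, and the chunk IDs are hypothetical. The `max_results` cap mirrors the `maxResults: 6` limit in the OpenClaw Setup configuration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(
    ranked_lists: list[list[str]],
    k: int = 60,
    max_results: int = 6,
) -> list[tuple[str, float]]:
    """Merge ranked lists of chunk IDs with Reciprocal Rank Fusion (RRF).

    Every list adds 1 / (k + rank) to each chunk it contains, so a chunk that
    both channels rank highly tends to outrank one found by a single channel.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by chunk ID for stable output.
    fused = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return fused[:max_results]


# Hypothetical top hits from each channel for one query.
keyword_hits = ["notes/2024-05-02.md#3", "MEMORY.md#12", "notes/2024-04-18.md#1"]
vector_hits = ["notes/2024-04-18.md#1", "notes/2024-05-10.md#7", "notes/2024-05-02.md#3"]

for chunk_id, score in reciprocal_rank_fusion([keyword_hits, vector_hits]):
    print(f"{score:.4f}  {chunk_id}")
```

RRF only looks at ranks, not raw scores, which makes it a convenient choice when BM25 scores and cosine similarities live on different scales.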

Without QMD, OpenClaw uses its default file-based memory — daily markdown notes and a long-lived MEMORY.md.

That works, but retrieval is limited to whatever the model can fit in context or find through basic text search.

With QMD enabled, your assistant gains:

- Better recall: hybrid search surfaces relevant memories that pure keyword matching would miss. Ask "what did we decide about the deployment strategy?" and get results even if your notes never used the word "deployment".
- Automatic indexing: QMD re-indexes your memory files on a 5-minute interval with a 15-second debounce. No manual reindexing, no restarts needed.
- Citations: when QMD retrieves a memory, it can cite the source document, so you know exactly where a recalled fact came from.
- Scope control: by default, QMD memory is scoped to direct chats only. Group noise stays out of your personal memory index.
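The interval-plus-debounce behavior can be modelled as a small scheduling function. This Python sketch assumes a simple model that the source does not spell out: a periodic scan every 5 minutes, plus a debounced reindex 15 seconds after the last file change in a burst of edits, where every new change restarts the timer. The function names are hypothetical.

```python
INTERVAL_S = 300  # update.interval: 5m
DEBOUNCE_S = 15   # update.debounceMs: 15000


def debounced_fires(change_events: list[float], debounce: float) -> list[float]:
    """Times at which a debounced reindex fires for a series of file changes.

    Each change restarts the timer, so only the last change in a burst
    (no further change within `debounce` seconds) schedules a reindex.
    """
    events = sorted(change_events)
    fires = []
    for i, t in enumerate(events):
        last_in_burst = i + 1 == len(events) or events[i + 1] - t >= debounce
        if last_in_burst:
            fires.append(t + debounce)
    return fires


def reindex_times(
    change_events: list[float],
    horizon: float,
    interval: float = INTERVAL_S,
    debounce: float = DEBOUNCE_S,
) -> list[float]:
    """All reindex times up to `horizon` seconds: periodic scans plus debounced ones."""
    periodic = [interval * n for n in range(1, int(horizon // interval) + 1)]
    debounced = [t for t in debounced_fires(change_events, debounce) if t <= horizon]
    return sorted(set(periodic + debounced))


# Three quick edits, then one more edit later, over a 10-minute window.
print(reindex_times([10, 12, 20, 100], horizon=600))  # [35, 115, 300, 600]
```

In this model the three quick edits at 10 s, 12 s and 20 s collapse into a single reindex at 35 s: a burst of writes costs one reindex instead of three.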

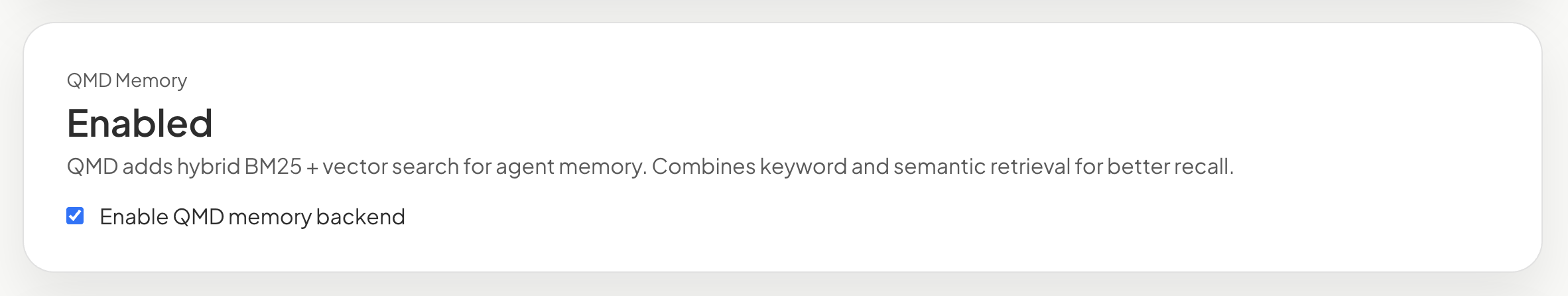

If you're running OpenClaw through OpenClaw Setup, enabling QMD takes one click: tick the QMD checkbox and the platform does the rest.

Behind the scenes, OpenClaw Setup generates the following configuration block and injects it into your instance:

```yaml
memory:
  backend: qmd
  citations: auto
  qmd:
    includeDefaultMemory: true
    update:
      interval: 5m
      debounceMs: 15000
    limits:
      maxResults: 6
      timeoutMs: 4000
    scope:
      default: deny
      rules:
        - action: allow
          match:
            chatType: direct
```

You don't need to touch this YAML yourself — the platform handles it. But if you're self-hosting OpenClaw, you can paste this block into your config.yaml directly.

- `includeDefaultMemory`: when `true`, QMD indexes your existing workspace memory files (MEMORY.md, daily notes) alongside its own vector store, so your old memories are not lost.
- `update.interval`: how often QMD re-scans and re-indexes memory documents. The default is every 5 minutes, frequent enough to stay current and light enough to avoid overhead.
- `limits.maxResults`: the maximum number of memory chunks returned per query. It is set to 6 by default, which gives enough context without flooding the prompt.
- `scope`: QMD only indexes conversations that match an explicit allow rule. The default setup allows direct messages only, keeping group chat noise out of your memory index.
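The `default: deny` plus `rules` shape reads like a first-match access list. The Python sketch below evaluates it that way. First-match-wins semantics, exact-equality matching, and the `"group"` chat type are assumptions for illustration, not documented QMD behavior.

```python
from dataclasses import dataclass, field


@dataclass
class ScopeRule:
    action: str  # "allow" or "deny"
    match: dict[str, str] = field(default_factory=dict)

    def matches(self, chat: dict[str, str]) -> bool:
        # Every key in `match` must equal the chat's value; an empty match hits everything.
        return all(chat.get(key) == value for key, value in self.match.items())


@dataclass
class Scope:
    default: str = "deny"
    rules: list[ScopeRule] = field(default_factory=list)

    def decide(self, chat: dict[str, str]) -> str:
        """Return the first matching rule's action, or the default if none match."""
        for rule in self.rules:
            if rule.matches(chat):
                return rule.action
        return self.default


# Mirrors the generated config: deny by default, allow direct chats.
scope = Scope(default="deny", rules=[ScopeRule("allow", {"chatType": "direct"})])
print(scope.decide({"chatType": "direct"}))  # allow
print(scope.decide({"chatType": "group"}))   # deny
```

In this model, any chat that no rule matches falls through to `default: deny`, so a new chat type stays out of the index until you add an allow rule for it.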

Once enabled, QMD works transparently. You don't need to change how you talk to your assistant. To get the most out of it, keep your memory files well maintained: the more you capture in MEMORY.md and daily notes, the better retrieval gets.

| Feature | Default memory | QMD memory |
|---|---|---|
| Search type | File-based / context window | Hybrid BM25 + vector |
| Semantic matching | No | Yes |
| Auto-indexing | No | Every 5 minutes |
| Citations | No | Auto |
| Scope control | All chats | Configurable (direct-only by default) |
| Enabling on OpenClaw Setup | On by default | One checkbox |